Top 5 Best Practices for Kubernetes Persistent Volume Management

Kubernetes supports stateful applications through persistent volumes (PVs), cluster-level storage resources that hold application data independently of any Pod. A PV declares its storage capacity and the access modes it supports: ReadWriteOnce, ReadOnlyMany, ReadWriteMany, or ReadWriteOncePod.

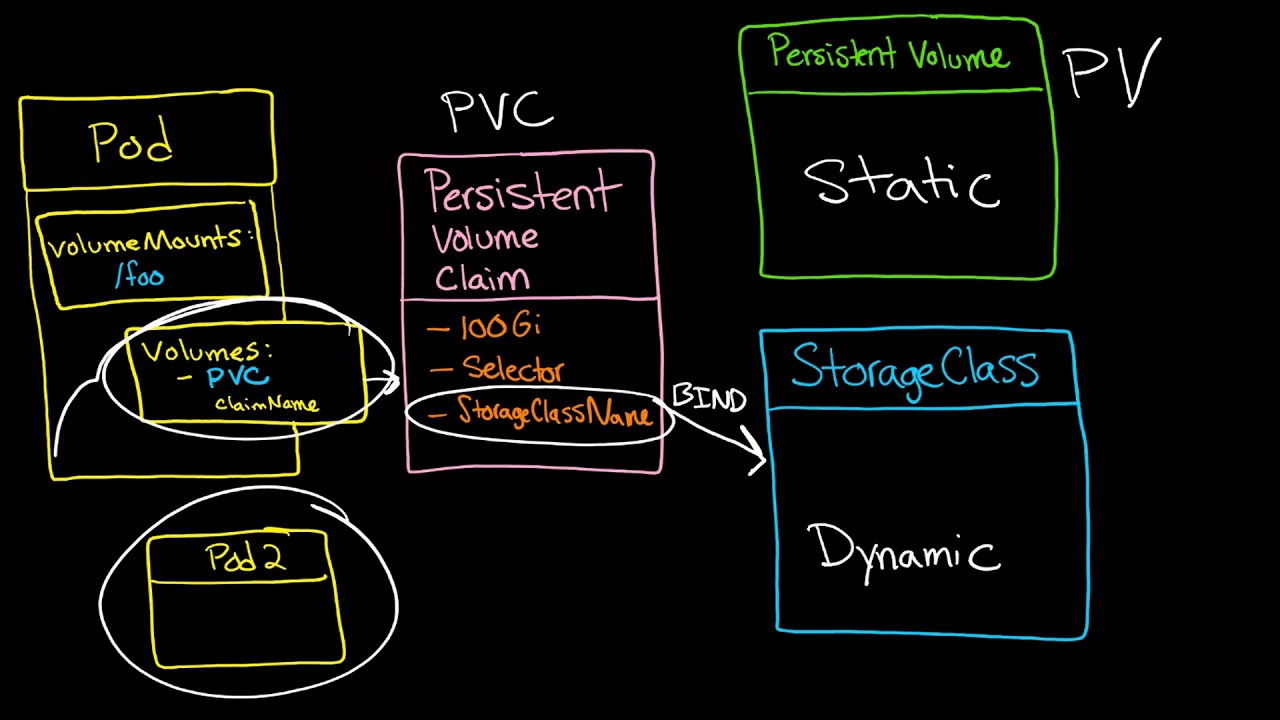

PVs can be provisioned statically by a cluster administrator or dynamically, on demand, when a user creates a PersistentVolumeClaim (PVC) that references a StorageClass. Each StorageClass names a provisioner, which determines the volume plugin used to create the PV.

Use Dynamic Provisioning

Kubernetes gives developers a simple way to obtain persistent storage: the PersistentVolumeClaim (PVC). The PVs that satisfy those claims can be created manually by administrators (static provisioning) or automatically through dynamic provisioning. Once a PVC is created, Kubernetes looks for a PV that matches its specification and binds the two; if none exists and the claim names a StorageClass, a new PV is provisioned for it. Once bound, the PV is reserved exclusively for that PVC, and any Pod in the PVC's namespace can mount it by referencing the claim.

Dynamic provisioning is one of the Kubernetes persistent volume best practices that can greatly reduce the time it takes to deploy stateful applications in a cluster. It eliminates the need to pre-provision PVs manually, and it avoids tying up unused capacity because a PV is created only when a claim asks for one.
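As a minimal sketch of what such a request can look like, the claim below asks for 10Gi of storage from a storage class. The names app-data and fast are hypothetical and chosen only for illustration; the manifest assumes a StorageClass named fast already exists in the cluster.

```yaml
# PersistentVolumeClaim that relies on dynamic provisioning.
# "app-data" and "fast" are hypothetical names used for illustration.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-data
spec:
  accessModes:
    - ReadWriteOnce          # mounted read-write by a single node
  storageClassName: fast     # assumed to exist in the cluster
  resources:
    requests:
      storage: 10Gi          # capacity the provisioner is asked to create
```

If no existing PV matches, the provisioner named by the storage class is expected to create one and bind it to this claim.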

A related benefit is that the PVC decouples the underlying storage from the Pods that use it. The volume and its data persist even when every Pod that references the claim is deleted, and a new Pod can mount the same volume through the same PVC with the access mode originally requested (ReadWriteOnce, ReadOnlyMany, ReadWriteMany, or ReadWriteOncePod). Keeping the storage definition in the claim rather than in each Pod spec also reduces the chance of Pods ending up in CrashLoopBackOff because of a misconfigured mount.
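To illustrate the decoupling, the sketch below shows a Pod that refers to its storage only by claim name. The Pod name, image, mount path, and the claim name app-data are assumptions made for this example.

```yaml
# Pod that mounts a volume through a PVC rather than naming the storage directly.
# All names here are hypothetical; "app-data" must be a PVC in the same namespace.
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
    - name: app
      image: nginx
      volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html   # where the volume appears in the container
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: app-data                  # the only link between Pod and storage
```

Deleting this Pod leaves the claim and its volume in place, so a replacement Pod with the same volumes entry sees the same data.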

Define a Reclaim Policy

Kubernetes Persistent Volumes provide non-volatile data storage independent of pod lifecycles. This allows you to confidently deploy application containers while providing the flexibility to manage performance and capacity across the cluster.

Each Pod can request storage through a PersistentVolumeClaim (PVC), which specifies the amount of storage and the access modes required. A PVC-to-PV binding is one-to-one: once a PV is bound to a claim, no other claim can bind to it, even if the PV is larger than the request.

Every PV also has a reclaim policy, set in its persistentVolumeReclaimPolicy field, which determines what happens to the volume when its PVC is deleted. Retain keeps the volume and its data until an administrator reclaims it manually. Recycle, which is deprecated in favor of dynamic provisioning, performs a basic scrub of the data and makes the volume available for a new claim. Delete removes the PV object together with the associated storage asset in the external infrastructure.
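On a PersistentVolume object, the policy is set in the persistentVolumeReclaimPolicy field. The following is a sketch of a statically provisioned PV that keeps its data after the claim is gone. The name archive-pv, the class name manual, and the hostPath location are assumptions for illustration; hostPath is only suitable for single-node test clusters.

```yaml
# Statically provisioned PV with an explicit reclaim policy.
# Names and the hostPath location are hypothetical.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: archive-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain   # keep the volume and data after the PVC is deleted
  storageClassName: manual                # claims must request the same class name to bind
  hostPath:
    path: /mnt/data                       # test-only backing store on the node
```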

For dynamically provisioned PersistentVolumes, the policy comes from the reclaimPolicy field of the StorageClass: every PV the class creates inherits that value, and the default is Delete when the field is omitted. With Delete, the PersistentVolume is removed automatically once the user deletes the PVC, not merely when a Pod stops using it. For data that must outlive its claim, set the policy to Retain.
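A minimal sketch of a StorageClass that sets the reclaim policy for the volumes it provisions is shown below. The class name fast-retain and the provisioner csi.example.com are placeholders, not real products; substitute the provisioner your storage vendor documents.

```yaml
# StorageClass whose dynamically provisioned PVs are kept after their PVC is deleted.
# "fast-retain" and "csi.example.com" are hypothetical placeholders.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-retain
provisioner: csi.example.com   # placeholder for a real CSI driver name
reclaimPolicy: Retain          # PVs created by this class inherit this value
```

Leaving out reclaimPolicy gives the PVs created by the class a policy of Delete.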

Define a Storage Class

When a developer creates a PersistentVolumeClaim (PVC) in the cluster, Kubernetes matches it to a suitable volume based on its specification: the access mode, the requested storage size, and the storage class. By default, binding happens as soon as the claim is created. A StorageClass can instead set volumeBindingMode to WaitForFirstConsumer, which delays binding and provisioning until a Pod that uses the PVC is created.

With delayed binding, the volume is selected or provisioned to fit the topology implied by the Pod's scheduling constraints, such as node selectors, affinity and anti-affinity rules, and taints and tolerations. This avoids creating a volume in a zone where the Pod cannot be scheduled. The volume must still satisfy the capacity and access mode that the claim requests.
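Whether a claim is bound immediately or only once a Pod that uses it is scheduled is controlled by the volumeBindingMode field of the StorageClass. The sketch below uses a hypothetical class name and a placeholder provisioner.

```yaml
# StorageClass that delays binding until a Pod using the claim is scheduled.
# "topology-aware" and "csi.example.com" are hypothetical placeholders.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: topology-aware
provisioner: csi.example.com
volumeBindingMode: WaitForFirstConsumer   # the default is Immediate
```

With this mode, a PVC stays in the Pending state until a Pod references it, which is expected behavior rather than an error.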

A cluster administrator needs to define a storage class to enable dynamic provisioning. The StorageClass manifest names the provisioner for the underlying storage provider along with any parameters it requires. The allowVolumeExpansion field is important: when it is set to true, users can grow a volume on demand by editing the storage request of its PVC. The default storage class in your cluster may not enable this feature, and not every storage backend supports it, so check the vendor documentation to learn how to enable it. Once the StorageClass exists, PVCs refer to it by name in their storageClassName field.
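Putting these pieces together, a storage class that permits expansion and a claim that refers to it might look like the sketch below. The names expandable and db-data and the provisioner csi.example.com are hypothetical; whether expansion actually works depends on the storage driver.

```yaml
# StorageClass that allows volumes to be resized after creation.
# Names and the provisioner are hypothetical placeholders.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: expandable
provisioner: csi.example.com
allowVolumeExpansion: true       # lets users raise the storage request on bound PVCs
---
# Claim that refers to the class above by name.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: db-data
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: expandable   # must match metadata.name of the StorageClass
  resources:
    requests:
      storage: 20Gi              # raise this value later to request expansion
```

Expansion only goes one way: the storage request can be increased but not reduced.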

Use Trident

Trident, NetApp's open source storage orchestrator for Kubernetes, lets storage administrators provide the appropriate persistent volume (PV) management for their application consumers. It focuses on the higher-level qualities your users seek, not on specific hardware platforms.

For example, when a user deletes a PersistentVolumeClaim whose PV has a reclaim policy of Delete, the deletion removes the PV object from Kubernetes and also destroys the associated storage asset in the external infrastructure. Because Trident provisioned the volume dynamically, it performs this cleanup on the storage backend itself, with no manual step from the administrator.

Trident also allows the administrator to restrict access by ensuring that the uid and gid of the Pod that requested the volume match those configured in the storage class, as shown in a video from Red Hat. Additionally, the administrator can wall off access to specific worker nodes by creating a namespace and specifying a security policy that defines that trust boundary through Trident. The video also covers using the tridentctl command to view and modify backend objects and storage classes.
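For orientation, a StorageClass served by Trident might look like the sketch below. It assumes Trident is installed with its CSI provisioner, csi.trident.netapp.io, and that a backend of type ontap-nas has been configured; the class name trident-nas is hypothetical, and the parameters that apply depend on your backend, so treat this as a starting point rather than a reference configuration.

```yaml
# StorageClass that hands provisioning to Trident.
# Assumes a Trident installation with an "ontap-nas" backend; the class name is hypothetical.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: trident-nas
provisioner: csi.trident.netapp.io
parameters:
  backendType: "ontap-nas"       # selects backends of this driver type
reclaimPolicy: Delete            # deleting the PVC also removes the backend volume
allowVolumeExpansion: true
```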

Use FlexClone

With dynamic provisioning, you don't need to know in advance which persistent volumes a developer will need. The cluster administrator describes the available storage in StorageClass YAML files, and developers then request the storage they need with PersistentVolumeClaims (PVCs). When a PVC is created, Kubernetes finds or provisions a PV, binds it to the claim, and mounts it into any Pod that references the claim.

When the PVC bound to a PV is deleted, the PV is released and handled according to its reclaim policy. A retained PV is not available to another claim until an administrator cleans it up and clears its claim reference. You can control this life cycle for dynamically provisioned storage by defining a reclaim policy on the StorageClass.

Errors such as FailedAttachVolume and FailedMount often trace back to a problem with the PersistentVolumeClaim or the volume behind it, for example a volume that is still attached to another node. These errors can be challenging to diagnose and resolve. If you encounter them, try scaling the affected Deployment to 0 replicas and debugging with a BusyBox Pod or another tool to identify which Pod is still using the PV. This lets you resolve the error before other workloads start writing to the same PV.
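One way to set up such a debugging session is a throwaway Pod that mounts the claim and does nothing else. The sketch below assumes the affected claim is named app-data; the Pod name and mount path are also hypothetical.

```yaml
# Temporary BusyBox Pod for inspecting a volume while the real workload is scaled down.
# "pv-debug" and "app-data" are hypothetical names; use the claim from the failing Pod.
apiVersion: v1
kind: Pod
metadata:
  name: pv-debug
spec:
  restartPolicy: Never
  containers:
    - name: shell
      image: busybox
      command: ["sleep", "3600"]   # keep the container alive for an hour
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: app-data
```

If this Pod also fails to start, its events (visible with kubectl describe pod pv-debug) usually point at the attach or mount step that is failing. If it starts, kubectl exec -it pv-debug -- sh opens a shell for inspecting the contents under /data. Delete the Pod when you are done so it does not hold the volume.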
